The Kinect is a depth-sensing input device made by Microsoft for the Xbox 360 game console, and it is a very popular device at present. It lets users control and interact with the Xbox 360 without a game controller. It contains a regular color camera that sends 640×480-pixel images 30 times per second, and an active depth camera based on a structured-light approach that also sends 640×480-pixel depth images 30 times per second. It also has a multi-array microphone. Together, these sensors provide full-body 3D motion capture, facial recognition and voice recognition capabilities.

A. The Goal of the Project

The initial goal of this project was to build a system able to recognize the movement of hands over a particular space, such as a table. We achieved this by using the Kinect device with appropriate algorithms. In general, we processed the depth images from the Kinect and compared them with a calibrated image in order to recognize the movements of the hands.

B. Components

Our system implementation required the following equipment:

- Computer
- Kinect device
- Table
- Improvised mechanism

We connected the Kinect to the computer and, with the help of the improvised mechanism, mounted it directly above the table, as you can see in the following picture:

Improvised mechanism

C. Software

We used the following software tools:

- Microsoft Visual Studio 2010
- Microsoft Kinect SDK
- OpenCV 2.3.1
- OpenGL

D. Hands detection with OpenCV

Basic idea

We retrieve images and data from the Kinect sensor. The data include the distance of each object from the sensor, which we display as a greyscale image: the closer an object is to the sensor, the brighter it appears. We process the retrieved images with OpenCV functions to detect the hands, and then combine the result with the distance information from the depth sensor. Finally, we display the hands with OpenGL graphics.
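The "closer is brighter" mapping can be illustrated with a minimal, dependency-free sketch. The working range (`near_mm`, `far_mm`), the linear ramp and the treatment of a zero reading as "no data" are assumptions made for illustration; the original project does not state how it scaled the depth values.

```python
def depth_to_grey(depth_mm, near_mm=800, far_mm=4000):
    """Map one depth reading (millimetres) to an 8-bit grey level.

    Closer objects come out brighter. near_mm and far_mm are assumed
    working limits, not values taken from the original project.
    """
    if depth_mm <= 0:
        # Assumption: a reading of 0 means "no data" and is drawn black.
        return 0
    clamped = min(max(depth_mm, near_mm), far_mm)
    # Linear ramp: near_mm maps to 255, far_mm maps to 0.
    return round(255 * (far_mm - clamped) / (far_mm - near_mm))


def depth_frame_to_grey(frame):
    """Convert a 2D list of depth readings into a 2D list of grey levels."""
    return [[depth_to_grey(d) for d in row] for row in frame]
```

With these assumed limits, a reading at 800 mm becomes white (255) and anything at or beyond 4000 mm becomes black (0).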

Definitions:

- Background image: The background image is the average of 50 frames captured during initialization.

- Table image: Once we have the background image, we binarize it (pixels with a value > 150 become 255, all others become 0) and flood-fill from the most central pixel (with newVal=200). We then compare the flood-filled image with the constant 200 to isolate the filled region. Finally, we erode that result to obtain the table.
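The two definitions above can be sketched on plain Python lists, without OpenCV, to make each step explicit. This is an illustrative reading of the description, not the project's code: the helper names are hypothetical, the comparison with 200 is assumed to be an equality test, the flood fill is assumed to be 4-connected, and the erosion is assumed to use a 3x3 neighbourhood. In OpenCV the corresponding operations would be functions such as `threshold`, `floodFill`, `compare` and `erode`.

```python
from collections import deque


def average_frames(frames):
    """Per-pixel integer mean of equally sized greyscale frames."""
    n = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) // n for x in range(w)]
            for y in range(h)]


def binarize(img, thresh=150):
    """Pixels above thresh become 255, all others 0."""
    return [[255 if p > thresh else 0 for p in row] for row in img]


def flood_fill(img, seed, new_val=200):
    """Return a copy with the 4-connected region around seed set to new_val."""
    out = [row[:] for row in img]
    h, w = len(out), len(out[0])
    sy, sx = seed
    old = out[sy][sx]
    if old == new_val:
        return out
    out[sy][sx] = new_val
    queue = deque([(sy, sx)])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w and out[ny][nx] == old:
                out[ny][nx] = new_val
                queue.append((ny, nx))
    return out


def erode(mask):
    """3x3 erosion; border pixels are always cleared in this sketch."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            if all(mask[y + dy][x + dx]
                   for dy in (-1, 0, 1) for dx in (-1, 0, 1)):
                out[y][x] = 255
    return out


def table_mask(background):
    """Binarize, flood-fill from the centre, keep the filled region, erode."""
    binary = binarize(background, 150)
    centre = (len(binary) // 2, len(binary[0]) // 2)
    filled = flood_fill(binary, centre, 200)
    selected = [[255 if p == 200 else 0 for p in row] for row in filled]
    return erode(selected)
```

A typical use would be `table = table_mask(average_frames(first_50_frames))`, where `first_50_frames` is a hypothetical list holding the frames captured at start-up. The erosion shrinks the table region slightly, which helps keep noisy pixels along the table edge out of the later comparison.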

To detect the hands, we take the difference between each incoming frame and the background image, and then compare this difference with the image that contains the table.
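One way to read this step is as a per-pixel test: a pixel belongs to the foreground if it differs from the background and also lies inside the table region. The sketch below follows that reading on plain Python lists. The function name and the `diff_thresh` value are assumptions, since the source gives no threshold for the difference.

```python
def hands_mask(frame, background, table, diff_thresh=10):
    """Mark pixels that differ from the background and lie over the table.

    frame, background and table are 2D lists of the same size; table is a
    binary mask (non-zero inside the table). diff_thresh is an assumed
    noise margin, not a value from the original project.
    """
    h, w = len(frame), len(frame[0])
    return [[255
             if table[y][x] and abs(frame[y][x] - background[y][x]) > diff_thresh
             else 0
             for x in range(w)]
            for y in range(h)]
```

Using the absolute difference means the sketch reacts to any change in depth over the table, whatever its sign.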

At this point we have detected everything that is above the table. We then find the blobs, and consider every blob larger than 100 pixels to be a hand.
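The blob step can be sketched as a connected-component search followed by a size filter. The version below is a plain-Python illustration under stated assumptions: blobs are 4-connected, "above 100 pixels" is taken to mean a pixel count strictly greater than 100, and the per-hand centre and mean depth are added only to show how the mask could be combined with the depth data. The function names and the returned fields are hypothetical.

```python
from collections import deque


def find_blobs(mask):
    """Return the 4-connected blobs of a binary mask as lists of (y, x)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            blob = []
            queue = deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def detect_hands(mask, depth, min_area=100):
    """Keep blobs with more than min_area pixels and summarise each one."""
    hands = []
    for blob in find_blobs(mask):
        n = len(blob)
        if n <= min_area:
            continue  # too small to be a hand under the 100-pixel rule
        cx = sum(x for _, x in blob) / n
        cy = sum(y for y, _ in blob) / n
        mean_depth = sum(depth[y][x] for y, x in blob) / n
        hands.append({"centre": (cx, cy), "area": n, "mean_depth": mean_depth})
    return hands
```

Each returned entry gives a position in the image plane plus an average depth, which is the kind of information a 3D view of the hand would need.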

E. Results

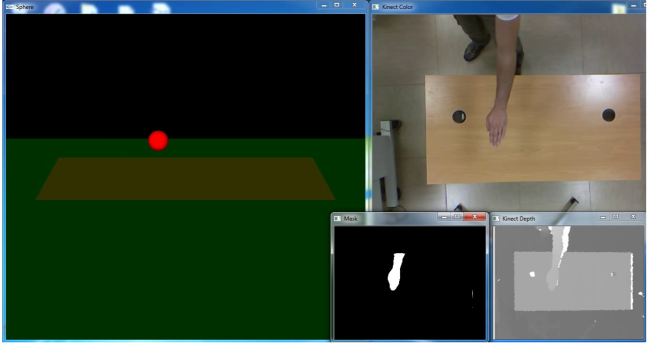

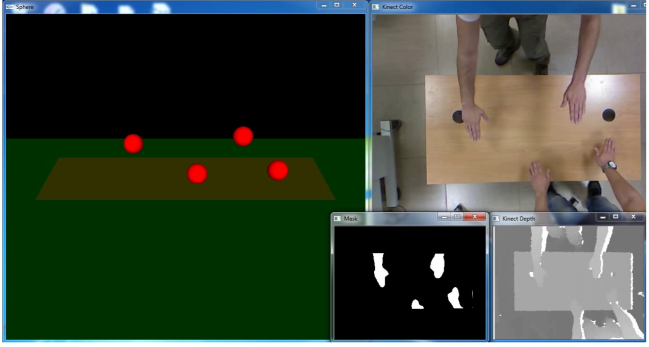

We created a complete application that detects hands in a particular area. The application can detect one or more hands over the table, as well as the movement and the height of each hand. All of this is represented in a 3D scene, as shown in the pictures. The application has 4 windows:

- Sphere window shows the 3D representation of the hand
- Kinect color window shows the image from the color camera
- Kinect depth window shows the depth representation
- Mask window shows everything that is above the table

Detection of one hand

Detection of two hands

Detection of four hands

Video

Hands detection with OpenCV
